Security and Observability for Cloud Native Platforms Part 1

This article comprises three parts. We first introduce what a cloud native platform is, with a deep dive into Kubernetes (K8s), the most popular open-source container orchestration solution. Then, we discuss the threat landscape and the overall security framework for mitigating the corresponding risks. The last part of the article focuses on monitoring and observability using extended Berkeley Packet Filter (eBPF) technology.

We hope this article helps you understand how security and observability for cloud native platforms differ from those of traditional deployments. The embedded links in the article lead to insightful definitions and guides that can help you design and implement your security and observability strategy, particularly if you are adopting Open vRAN.

In a K8s cluster, deploying an application or network microservice (in Open vRAN, for example) comprises the build, deploy, and runtime stages, and security and observability must be properly addressed at every stage.

The operations team, the platform team, the networking team, the security team, and the compliance team are all in charge of the seamless deployment and operation of applications and network microservices. Only strong collaboration between these teams will ensure efficient and effective DevSecOps: security and observability are a shared responsibility of all the teams involved, and no single organization can mitigate all current and future risks alone.

Cloud Native Platforms

Let’s start with a few definitions taken from the Cloud Native Computing Foundation (CNCF):

Cloud-native architecture and technologies are an approach to building and running scalable applications in modern, dynamic environments such as public, private, and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

Kubernetes, also known as K8s, is an open-source distributed operating system for container orchestration, i.e., for automating the deployment, scaling, and management of containerized applications. Its name comes from the Greek word for pilot or helmsman: Kubernetes steers a ship of Docker containers.

A cloud native network function (CNF) is a network function designed and implemented to run inside containers. CNFs inherit all cloud-native architectural and operational principles, including K8s lifecycle management, agility, resilience, and observability. In Open vRAN, for example, the virtual centralized unit (vCU) and virtual distributed unit (vDU) follow a disaggregated, containerized architecture, designed to be easily installed on commercial off-the-shelf (COTS) hardware and to interoperate with existing system components. This approach brings carriers many benefits, particularly in terms of total cost of ownership (TCO), automation, and innovation.

A Pod is the smallest deployable object in Kubernetes; it represents a single instance of a running process in a cluster. Pods may contain one or more containers, such as Docker containers. When a Pod runs multiple containers, the containers share the Pod’s resources (such as its IP address and storage volumes) and are managed as a single entity.

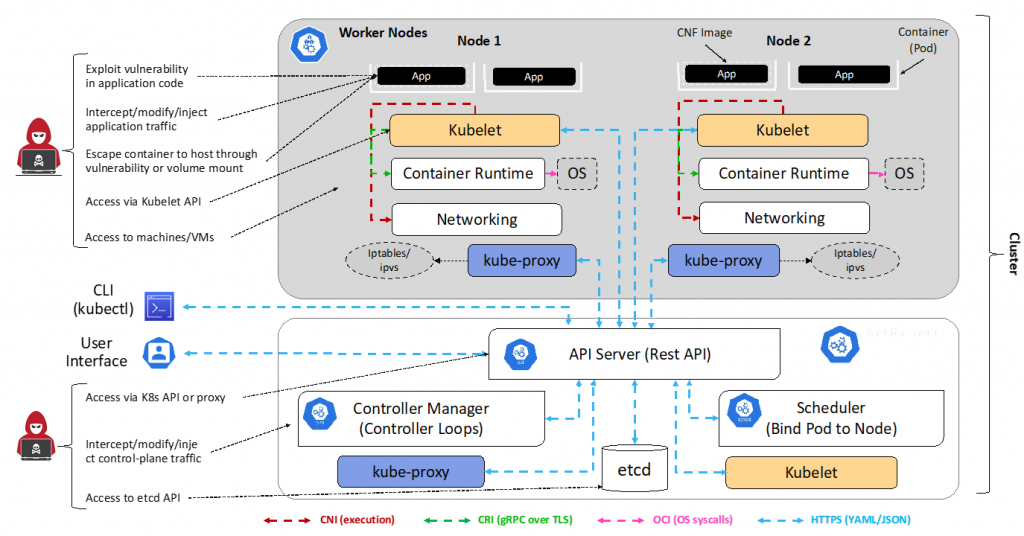

Kubernetes coordinates a highly available cluster of computers that are connected to work as a single unit, and it efficiently automates the distribution and scheduling of application containers across the cluster. A Kubernetes cluster consists of two types of resources:

- A control plane node (historically called the Master Node) that coordinates the cluster.

- Worker Nodes that run applications (or network microservices).

The control plane (CP) runs various server and manager processes for the cluster. When a cluster is built with kubeadm, the kubelet process is managed by systemd. Once running, the kubelet starts every Pod defined in /etc/kubernetes/manifests/; with kubeadm, these static Pods include the control plane components themselves.

The kubelet, running as a systemd service, handles changes and configuration on worker nodes. It accepts API calls for Pod specifications (a PodSpec is a JSON or YAML description of a Pod) and configures the local node until the specification has been met. Should a Pod require access to storage, Secrets, or ConfigMaps, the kubelet ensures access or creation. It also reports status back to the API Server, which persists it.
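To make the PodSpec idea concrete, the Python sketch below builds a minimal Pod manifest as a dictionary and prints it as JSON, the same structure you would otherwise write in YAML. It describes a Pod with an App container and a Logger sidecar that share an emptyDir volume. The Pod name and the image references (example.com/app:1.0, example.com/logger:1.0) are hypothetical placeholders; the field names (apiVersion, kind, metadata, spec, containers, volumes, volumeMounts, emptyDir) are standard Pod fields.

```python
import json


def make_pod_manifest(name: str, app_image: str, logger_image: str) -> dict:
    """Build a Pod manifest with an App container and a Logger sidecar.

    Both containers mount the same emptyDir volume, showing how containers
    in one Pod share the Pod's resources.
    """
    shared_mount = {"name": "logs", "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        # The "spec" object is the PodSpec the kubelet works to satisfy.
        "spec": {
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [shared_mount]},
                {"name": "logger", "image": logger_image, "volumeMounts": [shared_mount]},
            ],
            "volumes": [{"name": "logs", "emptyDir": {}}],
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image references, for illustration only.
    pod = make_pod_manifest("demo-app", "example.com/app:1.0", "example.com/logger:1.0")
    print(json.dumps(pod, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f`, after which the kubelet on the node chosen by the scheduler works to satisfy the spec.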

Now, let’s get familiar with the main Kubernetes components of the control plane node.

Kubernetes API Server

The API Server handles all internal and external calls (traffic), and it accepts and controls all actions, including access to the etcd database. It authenticates requests, validates and configures data for API objects, and services REST operations. In short, the API Server is the central hub of the entire cluster and works as the frontend to the cluster’s shared state. The worker nodes communicate with the control plane through the Kubernetes API, which the API Server exposes.
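As an illustration of the REST interface, the Python sketch below builds the URL paths the API Server exposes for namespaced resources and prepares, without sending, a GET request carrying a bearer token. The server address and token are hypothetical; a real client would also need to trust the cluster's CA certificate, and most tooling uses kubectl or an official client library rather than raw HTTP.

```python
import urllib.request


def resource_path(resource: str, namespace: str, group: str = "", version: str = "v1") -> str:
    """Return the REST path for a namespaced resource collection.

    Core-group resources (group "") are served under /api/<version>;
    every other API group is served under /apis/<group>/<version>.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}"


def build_list_request(server: str, token: str, path: str) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated GET request to the API Server."""
    return urllib.request.Request(
        server + path,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


if __name__ == "__main__":
    print(resource_path("pods", "default"))
    # -> /api/v1/namespaces/default/pods
    print(resource_path("deployments", "default", group="apps"))
    # -> /apis/apps/v1/namespaces/default/deployments
    # Hypothetical server address and token.
    req = build_list_request("https://api.example.internal:6443", "REDACTED",
                             resource_path("pods", "default"))
    print(req.get_method(), req.full_url)
```

Every such call, whether from kubectl, a controller, or a kubelet, goes through the API Server's authentication and authorization before any data is read from or written to etcd.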

Kubernetes Scheduler

The scheduler determines which node will host a Pod based on the available resources (such as CPU, memory, and volumes to bind), and then tries, and retries if needed, to deploy the Pod based on availability and success. More information about kube-scheduler can be found on GitHub.
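The scheduling decision can be pictured as two phases: filtering out nodes that cannot fit the Pod, then scoring the remaining ones. The Python sketch below is a deliberately simplified model of that idea, assuming only CPU and memory requests matter and preferring the least-allocated node; the real kube-scheduler uses a pluggable framework with many more filters and scores (affinity, taints, volume topology, and so on). The node names and figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int   # millicores
    cpu_requested: int  # millicores already requested by Pods bound to the node
    mem_capacity: int   # MiB
    mem_requested: int  # MiB


@dataclass
class PodRequest:
    name: str
    cpu: int  # millicores
    mem: int  # MiB


def fits(pod: PodRequest, node: Node) -> bool:
    """Filter phase: can the node accommodate the Pod's resource requests?"""
    return (node.cpu_capacity - node.cpu_requested >= pod.cpu
            and node.mem_capacity - node.mem_requested >= pod.mem)


def score(pod: PodRequest, node: Node) -> float:
    """Score phase: average fraction of capacity left free after placement."""
    cpu_free = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_requested - pod.mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None  # the Pod stays Pending and is retried later
    return max(feasible, key=lambda n: score(pod, n)).name


if __name__ == "__main__":
    nodes = [
        Node("worker-1", 4000, 3500, 8192, 1024),
        Node("worker-2", 4000, 1000, 8192, 4096),
        Node("worker-3", 2000, 0, 2048, 2000),
    ]
    print(schedule(PodRequest("web", cpu=500, mem=512), nodes))  # worker-2
```

Here worker-3 is filtered out because only 48 MiB of memory remain free, and worker-2 outscores worker-1 because more of its CPU stays unallocated after placement.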

etcd Database

The etcd database contains the state of the cluster, networking, and other persistent information, such as dynamic encryption keys and secrets, as discussed later in the article. It is accessible through curl and other HTTP libraries and provides reliable watch queries. Since it keeps the persistent state of the entire cluster, etcd, including its backups, must be protected and secured.
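Because etcd exposes a JSON gateway for its v3 API, a client can query it with any HTTP library. The Python sketch below prepares, but does not send, a range request that lists the keys under a prefix: keys are base64-encoded, and the range end for a prefix is the prefix with its last byte incremented. The endpoint is hypothetical; /registry/ is the default prefix under which the API Server stores objects (for example, /registry/secrets/). In a real cluster, etcd requires mutual TLS client certificates, and tight access control matters because, unless encryption at rest is configured, Secrets are stored there unencrypted.

```python
import base64
import json
import urllib.request


def b64(data: bytes) -> str:
    """The etcd v3 JSON gateway expects keys and values as base64 strings."""
    return base64.b64encode(data).decode("ascii")


def prefix_range_end(prefix: bytes) -> bytes:
    """Smallest key greater than every key starting with `prefix`.

    Increment the last byte that is below 0xFF and drop the bytes after it;
    if every byte is 0xFF, return b"\\x00", which etcd reads as "no upper bound".
    """
    end = bytearray(prefix)
    for i in range(len(end) - 1, -1, -1):
        if end[i] < 0xFF:
            end[i] += 1
            return bytes(end[: i + 1])
    return b"\x00"


def build_range_request(endpoint: str, prefix: bytes) -> urllib.request.Request:
    """Prepare (but do not send) a POST to /v3/kv/range listing keys under a prefix."""
    body = {
        "key": b64(prefix),
        "range_end": b64(prefix_range_end(prefix)),
        "keys_only": True,  # return key names only, not the (possibly secret) values
    }
    return urllib.request.Request(
        f"{endpoint}/v3/kv/range",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint; a real cluster's etcd requires mutual TLS.
    req = build_range_request("https://etcd.example.internal:2379", b"/registry/secrets/")
    print(req.get_method(), req.full_url, req.data.decode())
```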

Controller Manager

This process acts as a control loop daemon that interacts with the API Server to determine the state of the cluster. If the current state does not match the desired state, the manager takes the actions necessary to reach the desired state. As explained in the following section, there are several operators in use, such as endpoints, namespace, and replica.
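The reconciliation idea can be sketched in a few lines of Python. The toy model below assumes a hypothetical ClusterState object in place of the API Server and compares a desired replica count with the Pods that currently exist, creating or deleting Pods to close the gap. A real controller would watch the API Server and repeat this comparison continuously rather than being called by hand.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClusterState:
    """Hypothetical stand-in for the state the API Server would report."""
    pods: List[str] = field(default_factory=list)
    next_id: int = 1


def reconcile(app: str, desired_replicas: int, state: ClusterState) -> List[str]:
    """One pass of a control loop: diff current vs. desired state, then act."""
    actions = []
    while len(state.pods) < desired_replicas:  # too few replicas: create Pods
        name = f"{app}-{state.next_id}"
        state.next_id += 1
        state.pods.append(name)
        actions.append(f"create {name}")
    while len(state.pods) > desired_replicas:  # too many replicas: delete Pods
        actions.append(f"delete {state.pods.pop()}")
    return actions


if __name__ == "__main__":
    state = ClusterState()
    print(reconcile("web", 3, state))  # ['create web-1', 'create web-2', 'create web-3']
    state.pods.remove("web-2")         # simulate a failed Pod
    print(reconcile("web", 3, state))  # ['create web-4']
    print(reconcile("web", 3, state))  # [] because current state already matches desired
```

Removing a Pod and reconciling again produces a replacement, which is the self-healing behaviour that control loops give a Kubernetes cluster.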

Other Kubernetes Components

Other important components of Kubernetes are:

- Operators: controllers or watch-loops. An operator consists of an agent, or informer, and a downstream store. Examples of operators are endpoints, namespaces, and serviceaccounts, which manage the eponymous resources for Pods. Deployments manage ReplicaSets, which manage Pods running the same PodSpec, or replicas.

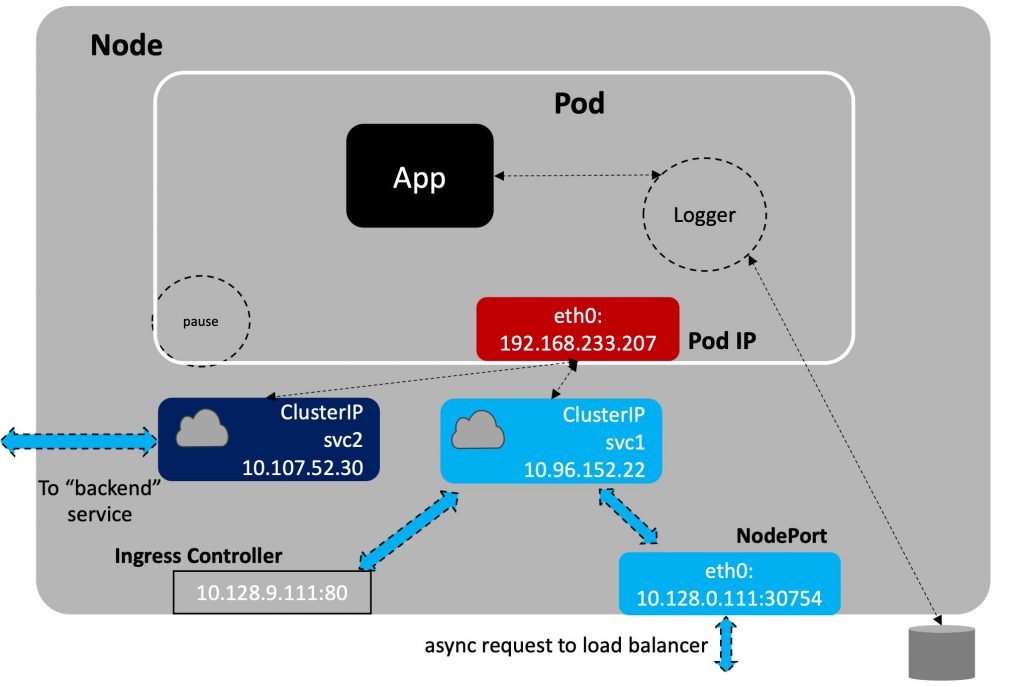

- Services: an operator that listens to the endpoints operator to provide a persistent IP address for Pods, whose own IP addresses are ephemeral and chosen from a pool. It sends messages via the API Server to kube-proxy on every node, as well as to the network plugin, such as calico-kube-controllers. A Service also connects Pods together, exposes Pods to the Internet, decouples settings, and handles Pod access policy for inbound requests, which is useful for resource control as well as for security.

- Namespaces: a mechanism for isolating groups of resources within a single cluster.

- Quotas: constraints that limit aggregate resource consumption per namespace.

- Network policies: application-centric constructs that allow you to specify how a Pod may communicate with various network “entities”.

- Storage: volumes, persistent volumes, node-specific volume limits, etc.
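To illustrate how network policies restrict traffic, the Python sketch below evaluates simplified NetworkPolicy objects written as dictionaries with the real field names (podSelector, policyTypes, ingress, from, ports). It follows the documented semantics: a Pod not selected by any ingress policy accepts all traffic, while a selected Pod accepts only traffic matching at least one rule. As simplifying assumptions, it handles only matchLabels Pod selectors within a single namespace and numeric ports, ignoring namespaceSelector, ipBlock, protocols, and egress. The ALLOW_FRONTEND policy is a hypothetical example.

```python
from typing import Dict, List


def selector_matches(selector: Dict, labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every key/value must match; an empty selector matches all Pods."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policies: List[Dict], dst: Dict[str, str], src: Dict[str, str], port: int) -> bool:
    """Decide whether a Pod labelled `src` may reach a Pod labelled `dst` on `port`."""
    selecting = [
        p for p in policies
        if "Ingress" in p["spec"].get("policyTypes", ["Ingress"])
        and selector_matches(p["spec"]["podSelector"], dst)
    ]
    if not selecting:
        return True  # Pods not selected by any ingress policy accept all traffic
    for policy in selecting:
        for rule in policy["spec"].get("ingress", []):
            peers = rule.get("from", [])
            ports = rule.get("ports", [])
            # Only podSelector peers are modelled here (assumption: same namespace).
            peer_ok = not peers or any(
                "podSelector" in peer and selector_matches(peer["podSelector"], src)
                for peer in peers
            )
            port_ok = not ports or any(p.get("port") == port for p in ports)
            if peer_ok and port_ok:
                return True
    return False  # selected, but no rule allows this traffic


# Hypothetical policy: only "frontend" Pods may reach "backend" Pods on TCP 8080.
ALLOW_FRONTEND = {
    "spec": {
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            "ports": [{"protocol": "TCP", "port": 8080}],
        }],
    }
}

if __name__ == "__main__":
    print(ingress_allowed([ALLOW_FRONTEND], {"app": "backend"}, {"app": "frontend"}, 8080))  # True
    print(ingress_allowed([ALLOW_FRONTEND], {"app": "backend"}, {"app": "other"}, 8080))     # False
```

A policy with an empty podSelector and no ingress rules selects every Pod and allows nothing, which is the common "default deny" starting point for a namespace.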

An example of a Service network is illustrated in the graphic below, where a Pod has a primary container (App) and an optional Logger sidecar. The pause container reserves the IP address in the namespace before the Pod’s other containers start. The figure also shows a ClusterIP, which “exposes” the deployed App to connections from inside the cluster; it is not the IP address of the cluster itself. The ClusterIP can be used to connect a NodePort (for access from outside the cluster), an Ingress controller or proxy, or other “backend” Pods.
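The stable-IP role of a Service can be modelled as a small Python class: clients always target the ClusterIP, while the set of backend Pod IPs is recomputed from a label selector whenever Pods come and go. This is a conceptual sketch, not how kube-proxy is implemented (it programs packet-forwarding rules such as iptables or IPVS on each node); the round-robin choice and all addresses are illustrative assumptions.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pod:
    name: str
    ip: str                 # ephemeral: a recreated Pod usually gets a new IP
    labels: Dict[str, str]


class Service:
    """Conceptual model: a stable ClusterIP in front of a changing set of Pod IPs."""

    def __init__(self, cluster_ip: str, selector: Dict[str, str]):
        self.cluster_ip = cluster_ip
        self.selector = selector
        self.endpoints: List[str] = []
        self._next = itertools.cycle(self.endpoints)

    def update_endpoints(self, pods: List[Pod]) -> None:
        """Recompute backends from Pods whose labels match the selector."""
        self.endpoints = [
            p.ip for p in pods
            if all(p.labels.get(k) == v for k, v in self.selector.items())
        ]
        self._next = itertools.cycle(self.endpoints)

    def route(self) -> str:
        """Pick a backend for a connection sent to the ClusterIP (round-robin here)."""
        if not self.endpoints:
            raise RuntimeError(f"no endpoints behind {self.cluster_ip}")
        return next(self._next)


if __name__ == "__main__":
    pods = [
        Pod("web-1", "10.244.1.5", {"app": "web"}),
        Pod("web-2", "10.244.2.7", {"app": "web"}),
        Pod("db-1", "10.244.1.9", {"app": "db"}),
    ]
    svc = Service("10.96.0.20", {"app": "web"})
    svc.update_endpoints(pods)
    print([svc.route() for _ in range(3)])  # ['10.244.1.5', '10.244.2.7', '10.244.1.5']
    # web-1 is recreated with a new IP; clients keep using the same ClusterIP.
    pods[0] = Pod("web-1", "10.244.3.2", {"app": "web"})
    svc.update_endpoints(pods)
    print(svc.cluster_ip, svc.endpoints)  # 10.96.0.20 ['10.244.3.2', '10.244.2.7']
```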

Cloud Native Platforms

Cloud native platforms, such as Symworld, enable designing, building, and operating workloads that are built in the cloud (private, hybrid, or public) and take full advantage of the cloud computing model.

At the same time, the new architecture and emerging, disruptive technologies, such as virtualization, disaggregation, automation, and intelligence, inevitably change the threat landscape. They introduce new security challenges that must be taken seriously, and the potential risks (threats × vulnerabilities) of disruption and hostile interference must be mitigated.

Here are some suggestions, by no means exhaustive, on how to improve the security posture of cloud native platforms by deploying appropriate safeguards and best practices. These reduce exposure to potential cyber threats and enable timely responses to the intrinsic vulnerabilities that come with the introduction of new technologies.

The CNCF provides a list of essential tests for obtaining a CNF Certification. The Security Tests give a general overview of what each test does, a link to the test code for that test, and links to additional information when relevant/available.
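As a flavour of what such security tests look for, the Python sketch below audits a Pod manifest for a few risky settings, using real Kubernetes securityContext fields (privileged, allowPrivilegeEscalation, runAsNonRoot) and the Pod-level hostNetwork flag. It is a hypothetical, minimal check and not the code of the CNCF test suite; the function name audit_pod and the finding messages are illustrative.

```python
from typing import Dict, List


def audit_pod(pod: Dict) -> List[str]:
    """Return findings for risky security settings in a Pod manifest (illustrative checks)."""
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext", {})
    findings = []
    if spec.get("hostNetwork", False):
        findings.append("pod: uses the host network namespace")
    for c in spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})
        if ctx.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # Privilege escalation is allowed by default unless explicitly disabled.
        if ctx.get("allowPrivilegeEscalation") is not False:
            findings.append(f"{name}: allowPrivilegeEscalation is not set to false")
        # A container-level runAsNonRoot overrides the Pod-level setting.
        if ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot")) is not True:
            findings.append(f"{name}: runAsNonRoot is not enforced")
    return findings


if __name__ == "__main__":
    risky = {"spec": {"hostNetwork": True,
                      "containers": [{"name": "app", "securityContext": {"privileged": True}}]}}
    hardened = {"spec": {"securityContext": {"runAsNonRoot": True},
                         "containers": [{"name": "app",
                                         "securityContext": {"allowPrivilegeEscalation": False}}]}}
    print(audit_pod(risky))     # four findings
    print(audit_pod(hardened))  # []
```

Checks like these fit naturally into the build and deploy stages, so that risky manifests are rejected before they ever reach a cluster.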